Mini-batch gradient descent combines the benefits of batch gradient descent and SGD: it reduces the variance of updates compared to SGD while keeping some stochasticity to escape local minima. Batch size refers to the number of training examples used in one iteration of the training process. Andrew Ng provides a good discussion of this, with some visuals, in his online Coursera class on ML and neural networks.
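As a rough illustration of how batch size, iterations, and epochs relate, the sketch below counts the iterations needed to pass over a dataset. The function names and the `drop_last` flag are hypothetical, chosen for this example only; the dataset size of 1000 is made up.

```python
import math


def iterations_per_epoch(num_examples: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of parameter updates needed to see every example once."""
    if num_examples <= 0 or batch_size <= 0:
        raise ValueError("num_examples and batch_size must be positive")
    if drop_last:
        # Assumption: an incomplete final batch is discarded.
        return num_examples // batch_size
    # Otherwise the final, smaller batch still counts as one iteration.
    return math.ceil(num_examples / batch_size)


def total_iterations(num_examples: int, batch_size: int, epochs: int) -> int:
    """Total updates over the whole run: iterations per epoch times epochs."""
    return iterations_per_epoch(num_examples, batch_size) * epochs


if __name__ == "__main__":
    # Illustrative numbers only: 1000 examples, batch size 32, 10 epochs.
    print(iterations_per_epoch(1000, 32))
    print(total_iterations(1000, 32, 10))
```

Doubling the batch size roughly halves the number of updates per epoch, which is one reason batch size and learning rate are often tuned together.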

How to determine the optimal values for Epoch, Batch Size, Iterations

One proposed explanation is that small-batch training may introduce enough noise for training to exit the loss basins of sharp minimizers and instead find flat minimizers that may be farther away. Large-batch training also achieves worse minimum validation losses than small-batch training. For example, batch size 256 achieves a minimum validation loss of 0.395, compared to 0.344 for batch size 32. While small batch sizes can offer faster convergence and improved exploration of the parameter space, they may also introduce noise and instability into the training process.

Introduction to Deep Learning and AI Training

This “sweet spot” usually depends on the dataset and the model in question. The reason for better generalization is vaguely attributed to the existence of “noise” in small-batch training. Because neural networks are extremely prone to overfitting, the idea is that seeing many small batches, each one a “noisy” representation of the entire dataset, causes a sort of “tug-and-pull” dynamic.


- Small batch sizes offer several advantages in the training of machine learning models.

- A larger batch size trains faster but may result in the model not capturing the nuances in the data.

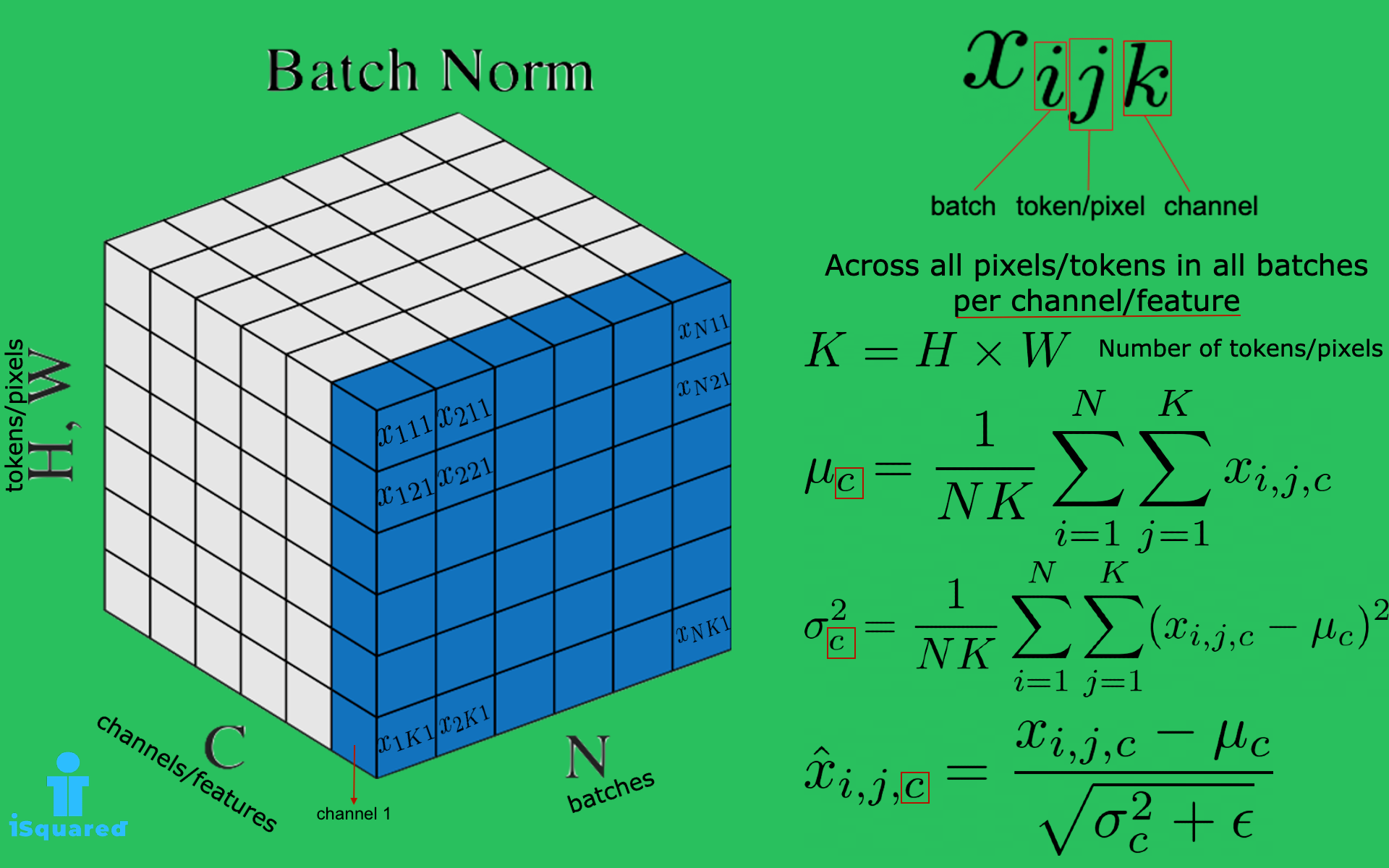

- Moreover, employing strategies such as learning rate adjustment, batch normalization, and systematic experimentation can help mitigate the impact of batch size on training and improve model performance.

- The number of iterations can have an impact on the accuracy and computational efficiency of the training process, and it is another important hyperparameter to tune when training deep learning models.

We also see in figure 11 that this is true across different layers in the model. Too few epochs can result in underfitting, where the model is unable to capture the patterns in the data. On the other hand, too many epochs can result in overfitting, where the model becomes too specific to the training data and is unable to generalize to new data. Computational speed is simply the speed of performing numerical calculations in hardware.

The purple arrow shows a single gradient descent step using a batch size of 2. The blue and red arrows show two successive gradient descent steps using a batch size of 1. The black arrow is the vector sum of the blue and red arrows and represents the overall progress the model makes in two steps of batch size 1. While not explicitly shown in the image, the hypothesis is that the purple line is much shorter than the black line due to gradient competition. In other words, the update from a single large-batch step is smaller than the sum of the updates from many small-batch steps.

We found that parallelization made small-batch training slightly slower per epoch, whereas it made large-batch training faster: for batch size 256, each epoch took 3.97 seconds, down from 7.70 seconds.
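The arrow picture can be reproduced on a toy problem. The sketch below is only an illustration under stated assumptions: a two-parameter linear model with squared-error loss, two made-up training examples, and a learning rate of 0.1. All function names are hypothetical. It compares the distance covered by one step of batch size 2 with the net distance covered by two successive steps of batch size 1.

```python
from math import sqrt


def grad(w, x, y):
    """Gradient of 0.5 * (w.x - y)**2 with respect to w for one example."""
    err = sum(wi * xi for wi, xi in zip(w, x)) - y
    return [err * xi for xi in x]


def sgd_step(w, batch, lr):
    """One gradient descent step using the mean gradient over the batch."""
    grads = [grad(w, x, y) for x, y in batch]
    mean = [sum(col) / len(grads) for col in zip(*grads)]
    return [wi - lr * gi for wi, gi in zip(w, mean)]


def displacement(w_start, w_end):
    """Euclidean distance between two parameter vectors."""
    return sqrt(sum((b - a) ** 2 for a, b in zip(w_start, w_end)))


def compare_steps(w0, ex1, ex2, lr=0.1):
    """Return (length of one batch-size-2 step, net length of two batch-size-1 steps)."""
    large = sgd_step(w0, [ex1, ex2], lr)                    # the "purple" arrow
    small = sgd_step(sgd_step(w0, [ex1], lr), [ex2], lr)    # the "black" arrow
    return displacement(w0, large), displacement(w0, small)


if __name__ == "__main__":
    # Made-up examples whose gradients point in different directions.
    large_len, small_len = compare_steps([0.0, 0.0], ([1.0, 0.0], 1.0), ([0.0, 1.0], 1.0))
    print(large_len, small_len)
```

In this toy case the single averaged step covers half the distance of the two small steps. Real models will differ, since the second small-batch gradient is evaluated at an already updated point.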

We still observe a slight performance gap between the smallest batch size (val loss 0.343) and the largest one (val loss 0.352). Some have suggested that small batches have a regularizing effect because they introduce noise into the updates that helps training escape the basins of attraction of suboptimal local minima [1]. However, the results from these experiments suggest that the performance gap is relatively small, at least for this dataset.

If the number of iterations is set too high, training wastes computation and the model may overfit. If it is set too low, training stops before the model has converged, which results in suboptimal performance. The ideal number of epochs for a given training process can be determined through experimentation and by monitoring the performance of the model on a validation set. Once the model stops improving on the validation set, it is a good indication that a suitable number of epochs has been reached.
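Monitoring the validation set in this way is commonly called early stopping. The sketch below is one minimal way to express it, assuming a list of per-epoch validation losses is already available. The function name, the `patience` and `min_delta` parameters, and the loss values are illustrative assumptions, not results from the experiments above.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Scan per-epoch validation losses and decide where to stop.

    Returns (best_epoch, stop_epoch), both zero-based. Training stops once the
    loss has failed to improve by more than min_delta for `patience` epochs in a row.
    """
    if not val_losses:
        raise ValueError("val_losses must not be empty")

    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    stop_epoch = len(val_losses) - 1  # default: ran through every epoch

    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                stop_epoch = epoch
                break

    return best_epoch, stop_epoch


if __name__ == "__main__":
    # Made-up validation losses, for illustration only.
    losses = [0.50, 0.42, 0.40, 0.41, 0.43, 0.44, 0.39]
    print(early_stopping_epoch(losses, patience=3))
```

A larger `patience` tolerates noisy validation curves at the cost of extra epochs. In practice the model weights from `best_epoch` are usually the ones kept.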